Camera

It's only USB 1.1, which is troublesome because it means that images have to be sent in compressed form in order for us to have the image rates we need (approximately 25fps). I would have preferred USB 2.0, but 1.1 seems to do the trick.

Along the way, I discovered that you can focus webcams. I had assumed that they were like disposable cameras, one size fits all, but, nope, it turns out they can be, and must be, focused. Chalk one up to experience.

One problem with a camera is that there is a certain lag between reality and when the computer receives the image. To be fair, this lag exists for everything in the world, from your eyes to your fingers to highly sensitive nuclear sensors, but with webcams it's particularly noticeable. You can see it yourself by plugging a webcam into your computer and then waving your hand in front of it. You'll notice that there's a lag between when you move your hand and when you see the movement on the screen. Even more annoying is that we don't know how much lag occurs, or when. It's an interesting problem.

This lag occurs at multiple levels. First, there is the shutter exposure time itself, which can reach 40ms. Then there is a latency between when the camera takes the photo and when it is sent to the computer. This latency is unknown, and without access to the drivers of the TouCam, cannot be precisely estimated. However, it's reasonable to suppose that it's on the order of several if not tens of milliseconds. This extra time is probably the result of compressing the data.

Next, there is a transmission delay from the bus. When you consider that the maximum USB 1.1 can support is 12Mbps, and at 40fps in 640x480 resolution the bus is saturated, you realize that this sort of transmission must take from 20 to 40ms. That is quite a long time, considering that our sample period is 40ms. This means that, at the least, we're always lagging real time by one photo just because of compression and data transmission. Of course, in reality I use a 320x240 resolution, which means that the bus only takes 5 to 10ms for each photo under ideal conditions. That is still a relatively long time.
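To make the bus arithmetic concrete, here is a small sketch of the calculation. It assumes the nominal 12 Mbit/s full-speed rate of USB 1.1 and ignores protocol overhead, so real transfers will be slower. The function names and the compressed frame sizes are illustrative assumptions, not measurements from the TouCam.

```python
def raw_frame_bytes(width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Size of one uncompressed frame (24-bit colour assumed)."""
    return width * height * bytes_per_pixel


def transfer_time_ms(frame_bytes: int, bus_mbps: float = 12.0) -> float:
    """Ideal time to move one frame over the bus, with no protocol overhead."""
    bits = frame_bytes * 8
    return bits / (bus_mbps * 1_000_000) * 1000.0


if __name__ == "__main__":
    # An uncompressed 320x240 frame cannot fit in a 40 ms frame period.
    raw = raw_frame_bytes(320, 240)
    print(f"raw 320x240: {raw} bytes, {transfer_time_ms(raw):.1f} ms")

    # Hypothetical compressed sizes that land in the 5 to 10 ms range.
    for size in (7_500, 15_000):
        print(f"compressed {size} bytes: {transfer_time_ms(size):.1f} ms")
```

Under these assumptions, an uncompressed 320x240 frame would need roughly 154ms on the bus, which is why compression is unavoidable at 25fps. A 5 to 10ms transfer corresponds to a compressed frame of about 7.5 to 15 kilobytes.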

After the bus, you get into the real latencies: the operating system. Windows is what's known as a “soft real-time” O/S, meaning that things don't occur in real time, but they happen close enough that the user doesn't usually notice. We're not your average user, though, and these sorts of differences are easily noticed.

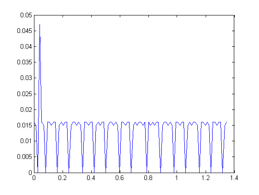

Basically, Windows buffers everything that comes in over USB until it finds a moment to send the data to where it needs to go. This can take a varying amount of time, depending on what else is going on. Major system interrupts seem to happen on the order of once every 100ms, completely halting all other processes. This is shown in the image and is quite bothersome.
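One way to spot these stalls is to log the time at which each frame arrives in the application and look for gaps much longer than the frame period. The sketch below is only an illustration: `find_stalls` and its parameters are hypothetical names, and the timestamps are assumed to come from whatever clock the capture loop has available.

```python
def find_stalls(arrival_times_s, period_s=0.040, tolerance=0.5):
    """Return (frame_index, gap_seconds) for every gap between consecutive
    frames that exceeds the expected period by more than `tolerance`
    (0.5 means 50% longer than expected)."""
    stalls = []
    limit = period_s * (1.0 + tolerance)
    for i in range(1, len(arrival_times_s)):
        gap = arrival_times_s[i] - arrival_times_s[i - 1]
        if gap > limit:
            stalls.append((i, gap))
    return stalls


if __name__ == "__main__":
    # Made-up arrival times: the fourth frame shows up about 100 ms after the third.
    times = [0.00, 0.04, 0.08, 0.18, 0.22]
    for index, gap in find_stalls(times):
        print(f"frame {index} arrived after a {gap * 1000:.0f} ms gap")
```

This only shows when frames reach the program, not when they were taken, so it measures the irregularity of delivery rather than the total lag.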

After finding this page on using oscilloscopes to measure shutter speed, I did a few tests along similar lines and discovered that the camera sensor was actually quite accurate time-wise, although the time between when the picture was taken and when it arrived on the PC was quite different, and not always uniform. This leads to the interesting problem of how to determine at which precise instant a photo was taken.

In the end, I couldn't find any easy way to do it. The best idea I came up with is to build a row of lights that lights up like an oscilloscope trace and to use image analysis to determine when a photo was taken. This is a little outside of my competencies, though, and I didn't really have the time to implement it. I'm quite certain that it will work, though, with a future model of the table where everything is so firmly screwed together that I won't have to worry about calibrating the table every 5 minutes, which is currently the case.
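As a rough illustration of the row-of-lights idea, here is one possible reading of it, which was never built or tested. It assumes the lights are switched on one after another at a fixed, known step, that the brightness of each light has already been extracted from the photo, and that the lights lit during the exposure form one contiguous run. The function name, the threshold, and the sample values are all hypothetical.

```python
def decode_light_row(brightness, step_ms, threshold=128):
    """Estimate when, within one sweep of the light row, a photo was exposed.

    brightness: one value per light, in sweep order.
    step_ms:    how long each light stays on before the next one takes over.
    Returns (start_ms, exposure_ms) relative to the start of the sweep, or
    None if the pattern cannot be decoded.
    """
    lit = [b >= threshold for b in brightness]
    n = len(lit)
    if not any(lit) or all(lit):
        return None  # nothing visible, or exposure longer than one sweep

    # The run starts at the lit light whose predecessor is dark; index -1
    # wraps around, so a run crossing the end of the row is handled too.
    starts = [i for i in range(n) if lit[i] and not lit[i - 1]]
    if len(starts) != 1:
        return None  # lit lights are not contiguous: noise or a bad threshold

    return starts[0] * step_ms, sum(lit) * step_ms


if __name__ == "__main__":
    # Made-up brightness readings with lights 2, 3 and 4 lit, 5 ms per light.
    print(decode_light_row([10, 12, 200, 220, 210, 15, 9, 11], step_ms=5))
```

The resolution of such a scheme is limited to one step, and it only gives the time within a sweep, so the sweep itself would still have to be tied to the computer's clock.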

In our most recent table, we bought a firewire industrial camera, one that uses a trigger signal that allows us to sync the camera and computer together. We can know with microsecond precision when the image was taken. However, these kinds of cameras are very expensive, so for general use I don’t recommend this.

There is another avenue to explore, though: DV cameras. DV cameras can be had in America right now quite cheaply (<$200 on eBay) and are quite reliable. Even better, they use FireWire instead of USB, which means that the latency is far lower and far more consistent. Lastly, the optics are usually quite good, especially compared to those of a webcam. We have yet to do any tests, but in theory it should work right off the bat, with Linux as well as Windows.

However, be very careful about what you buy. If it is an NTSC camera, it is not suitable for this task because the interlacing makes for very poor and blurry motion. If the camera has a PAL mode it should work better. Still, we prefer to wait and revisit this idea in a few years when progressive HD cameras reach the market at cut rates. Until then, FireWire webcams seem to be the best.

Camera Calibration

The camera is quite easy to calibrate. I simply place a sheet of A4 paper on the table, click on its four corners, and, knowing its exact dimensions, calculate the pixels-to-meters ratio. The camera calibration code is here.
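For readers who want to see the arithmetic, here is a minimal sketch of that calculation. It is not the project's calibration code. It assumes the camera looks straight down at the table, so that one scale factor applies everywhere, and that the four corners are clicked in order around the sheet. The function name and the example coordinates are made up.

```python
import math

# A4 paper dimensions in meters (210 mm x 297 mm).
A4_SHORT_M = 0.210
A4_LONG_M = 0.297


def pixels_per_meter(corners):
    """corners: four (x, y) pixel positions, clicked in order around the sheet."""
    sides = [math.dist(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    # Opposite sides should match, so average each pair to soften click errors.
    pair_a = (sides[0] + sides[2]) / 2
    pair_b = (sides[1] + sides[3]) / 2
    short_px, long_px = sorted((pair_a, pair_b))
    # Average the scale estimated from the short and the long edges.
    return (short_px / A4_SHORT_M + long_px / A4_LONG_M) / 2


if __name__ == "__main__":
    clicked = [(100, 100), (397, 100), (397, 310), (100, 310)]
    scale = pixels_per_meter(clicked)
    print(f"{scale:.1f} pixels per meter, {1000 / scale:.3f} mm per pixel")
```

If the scales from the short and long edges disagree noticeably, the camera is probably not square to the table or the clicks were off, and a full perspective correction would be the more appropriate tool.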